Deploying my first model to HuggingFace spaces

Steps to get the hugging face gradio application to work

- toc: true

- badges: true

- comments: true

- categories: [sound, hugging face, fastai]

- image: images/Hugging-Face.png

Know before getting started

This notebook code is the same used to create my app.py file you can find in UrbanSounds8k spaces repo.

Creating a space is simple and intuitive at Hugging Face. You will need a "Create a new space" and "Create a new model repository" at huggingfaces. You will find the interface to create a new model repository (repo) in your profile settings.

If you upload your model artifacts into your spaces repository, you will run into 404 or 403 series errors. Once you create a model repo, the installation steps of Hugging Face will have an install git lfs in the set of instructions specific to the location you clone this empty repo.

If you upload your model artifacts into your spaces repository, you will run into 404 or 403 series errors. Once you create a model repo, the installation steps of Hugging Face will have an install git lfs in the set of instructions specific to the location you clone this empty repo.

Add in that repo before copying your model the *.pkl file from the earlier step; ensure you track pkl files as a type of file managed by Git LFS

git lfs track "*.pkl"

Tip: Note what is my spaces repo and what is in my model repo

If you miss this step you will run into 403 errors as you execute this line:

model_file = hf_hub_download("gputrain/UrbanSound8K-model", "model.pkl")

Back to spaces repo, for which I have specific requirements.txt to be able to load librosa modules to get my inference function to work. In my example, I also needed to get my labeller function to get my model to work. This requirements.txt is my spaces repo.

#collapse-hide

import gradio

from fastai.vision.all import *

from fastai.data.all import *

from pathlib import Path

import pandas as pd

from matplotlib.pyplot import specgram

import librosa

import librosa.display

from huggingface_hub import hf_hub_download

from fastai.learner import load_learner

Matplotlib is building the font cache; this may take a moment.

#collapse-hide

ref_file = hf_hub_download("gputrain/UrbanSound8K-model", "UrbanSound8K.csv")

model_file = hf_hub_download("gputrain/UrbanSound8K-model", "model.pkl")

Downloading: 0%| | 0.00/494k [00:00<?, ?B/s]

Downloading: 0%| | 0.00/87.6M [00:00<?, ?B/s]

Labeller function

My labeller function and loading of the model code are below. Model loaded and vocabulary on classes retrieved from the model object.

#collapse-hide

df = pd.read_csv(ref_file)

df['fname'] = df[['slice_file_name','fold']].apply (lambda x: str(x['slice_file_name'][:-4])+'.png'.strip(),axis=1 )

my_dict = dict(zip(df.fname,df['class']))

def label_func(f_name):

f_name = str(f_name).split('/')[-1:][0]

return my_dict[f_name]

model = load_learner (model_file)

labels = model.dls.vocab

Helpful external facing markdown text

This bit of code allows some text markdown to appear in your demo. It takes standard markdown for tables and text formats, which is very useful to provide some descriptive elements to your demo, from my spaces repo.

#collapse-hide

with open("article.md") as f:

article = f.read()

Inference

Interface options

The interface option allows for some text and examples objects which reside in your spaces repo.

#collapse-hide

interface_options = {

"title": "Urban Sound 8K Classification",

"description": "Fast AI example of using a pre-trained Resnet34 vision model for an audio classification task on the [Urban Sounds](https://urbansounddataset.weebly.com/urbansound8k.html) dataset. ",

"article": article,

"interpretation": "default",

"layout": "horizontal",

# Audio from validation file

"examples": ["dog_bark.wav", "children_playing.wav", "air_conditioner.wav", "street_music.wav", "engine_idling.wav",

"jackhammer.wav", "drilling.wav", "siren.wav","car_horn.wav","gun_shot.wav"],

"allow_flagging": "never"

}

Pipeline helper function

With my Urban Sound, 8K example, I have a custom transformation that takes the audio wav file and puts this through librosa to create a Melspectrogram. I am using a fixed temporary image as temp.png for my inference object.

#collapse-hide

def convert_sounds_melspectogram (audio_file):

samples, sample_rate = librosa.load(audio_file) #create onces with librosa

fig = plt.figure(figsize=[0.72,0.72])

ax = fig.add_subplot(111)

ax.axes.get_xaxis().set_visible(False)

ax.axes.get_yaxis().set_visible(False)

ax.set_frame_on(False)

melS = librosa.feature.melspectrogram(y=samples, sr=sample_rate)

librosa.display.specshow(librosa.power_to_db(melS, ref=np.max))

filename = 'temp.png'

plt.savefig(filename, dpi=400, bbox_inches='tight',pad_inches=0)

plt.close('all')

return None

Pipeline predict function

The predict function uses this temp.png file for its predictions, and the labels_probs object has a list of class probabilities.

#collapse-hide

def predict():

img = PILImage.create('temp.png')

pred,pred_idx,probs = model.predict(img)

return {labels[i]: float(probs[i]) for i in range(len(labels))}

return labels_probs

The Pipeleine function

My inference pipeline is done in this end2endpipeline object that converts the sound and returns the output of my predict function.

#collapse-hide

def end2endpipeline(filename):

convert_sounds_melspectogram(filename)

return predict()

Launch options

I call the gradio Interface object referencing my inference pipeline displaying input types and output classes.

#collapse-output

demo = gradio.Interface(

fn=end2endpipeline,

inputs=gradio.inputs.Audio(source="upload", type="filepath"),

outputs=gradio.outputs.Label(num_top_classes=10),

**interface_options,

)

/home/ec2-user/anaconda3/envs/pytorch_p38/lib/python3.8/site-packages/gradio/deprecation.py:40: UserWarning: `optional` parameter is deprecated, and it has no effect

warnings.warn(value)

/home/ec2-user/anaconda3/envs/pytorch_p38/lib/python3.8/site-packages/gradio/deprecation.py:40: UserWarning: The 'type' parameter has been deprecated. Use the Number component instead.

warnings.warn(value)

/home/ec2-user/anaconda3/envs/pytorch_p38/lib/python3.8/site-packages/gradio/deprecation.py:40: UserWarning: `layout` parameter is deprecated, and it has no effect

warnings.warn(value)

Gradio local or public endpoint

The launch options launches the gradio UI. When using AWS I wasn't able to get this to work with the local URL, however if you use the share=True you get a gradio URL that works.

#collapse-hide

launch_options = {

"enable_queue": True,

"share": False,

#"cache_examples": True,

}

demo.launch(**launch_options)

Running on local URL: http://127.0.0.1:7861/

To create a public link, set `share=True` in `launch()`.

(<gradio.routes.App at 0x7f671108ce50>, 'http://127.0.0.1:7861/', None)

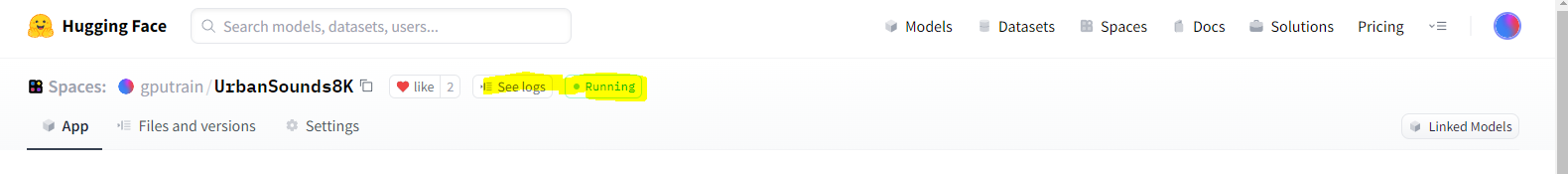

Hugging Face

The final end point once you assemble all this work through the logs (to resolve errors) is here

Comments ()